Welcome to a new blog post. This time it’s not going to be an HTB machine writeup. This post covers the process for checking misconfigurations in a target client’s AWS S3 buckets, along with a proof of concept for a bug that I recently found. So let’s continue without further ado…

What Are Amazon S3 Buckets?

Simply put, a bucket is a storage container offered by Amazon S3 (Amazon Simple Storage Service). Registered customers can upload their data as objects using the Amazon S3 API, the S3 console, or the AWS CLI, and those objects can then be accessed globally through the appropriate interface.

Naming Conventions

As for the naming scheme, every bucket name is globally unique: no other account can use the same bucket name until its owner deletes the bucket. Each bucket is also tied to a region, which the user chooses when creating the bucket.

Accessing a Bucket

Any of these URL formats will reach a bucket in a browser:
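As a rough sketch, the commonly documented virtual-hosted and path-style forms can be generated like this. The bucket name `example-bucket` and the region `eu-west-1` are placeholders of mine, not values from this test, and path-style URLs may not work for every bucket.

```python
def bucket_urls(bucket: str, region: str = "us-east-1") -> list[str]:
    """Return common HTTPS URL forms for an S3 bucket (illustrative helper)."""
    return [
        # Virtual-hosted style: the bucket name is part of the hostname.
        f"https://{bucket}.s3.amazonaws.com/",
        f"https://{bucket}.s3.{region}.amazonaws.com/",
        # Path style: the bucket name is the first path segment.
        f"https://s3.amazonaws.com/{bucket}/",
        f"https://s3.{region}.amazonaws.com/{bucket}/",
    ]


if __name__ == "__main__":
    for url in bucket_urls("example-bucket", "eu-west-1"):
        print(url)
```

Pasting each printed URL into a browser is a quick way to see which form the bucket answers on.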

Permissions

Access Control List

Finding the Bucket Name

See this blog for more in-depth enumeration of bucket names: https://medium.com/@localh0t/unveiling-amazon-s3-bucket-names-e1420ceaf4fa

Proof Of Concept

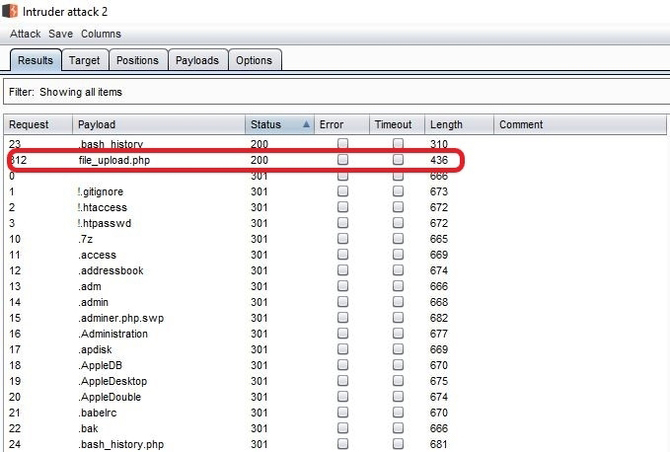

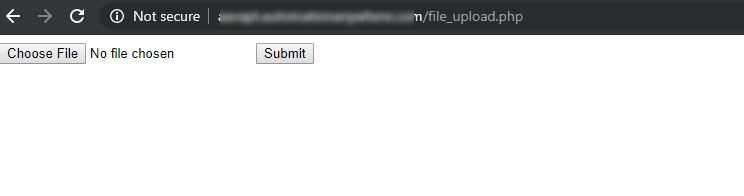

Now on to the testing section. The website I refer to below was not part of a bug bounty program but a private engagement, so I can’t reveal its name. Let’s say the URL is https://redacted.com. Before I begin testing any application, I always run a quick dirb scan and some Burp Intruder brute forcing for specific backup files, and that’s where I came across a file called file upload.php.

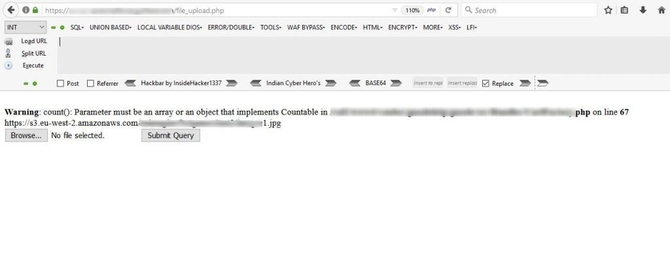

It actually brought a smile to my face, as it clearly showed that the developers had neglected to delete the file before deployment, so I figured it would be a good way to upload my shell. I later realized that the function was actually broken and did not even accept valid files such as images, PDFs, or XLS files. Whatever I tried to upload resulted in an error, and that error always showed the full S3 URL along with the name of the bucket.

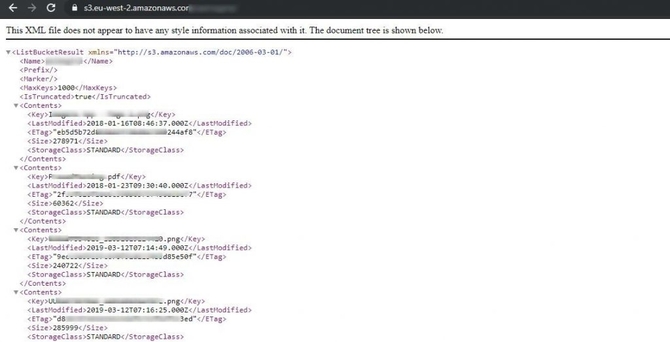
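When an error message leaks an S3 URL like this, the bucket name can be pulled out mechanically. Below is a minimal sketch that assumes the URL follows one of the usual virtual-hosted or path-style forms. The function name `find_bucket_names` and the sample error text are made up for illustration and are not the real output from the target.

```python
import re

# Loose approximation of a bucket name: 3-63 lowercase letters, digits, dots, hyphens.
BUCKET = r"[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]"
# Matches s3.amazonaws.com, s3.<region>.amazonaws.com and s3-<region>.amazonaws.com.
HOST_SUFFIX = r"s3(?:[.-][a-z0-9-]+)?\.amazonaws\.com"

VHOST_STYLE = re.compile(rf"https?://({BUCKET})\.{HOST_SUFFIX}")
PATH_STYLE = re.compile(rf"https?://{HOST_SUFFIX}/({BUCKET})(?=[/?\s]|$)")


def find_bucket_names(text: str) -> list[str]:
    """Return the distinct bucket names referenced by S3 URLs found in text."""
    names: list[str] = []
    for pattern in (VHOST_STYLE, PATH_STYLE):
        for match in pattern.finditer(text):
            name = match.group(1)
            if name not in names:
                names.append(name)
    return names


if __name__ == "__main__":
    # Hypothetical error text, shaped like the leak described above.
    error = "Upload failed: https://example-bucket.s3.amazonaws.com/uploads/a.png"
    print(find_bucket_names(error))
```

The same helper can be run over saved HTTP responses or JavaScript files to spot bucket names that never show up in an error page.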

First, I visited the link in my browser and saw that directory listing was enabled, which meant that anyone could read the contents of the bucket.

Next, I had to test whether there was write access, so that I could upload my own files. To do this, I quickly installed and configured the AWS CLI tool on my Kali machine.

To install the AWS CLI: apt-get install awscli
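A bucket with public listing answers a plain GET on its root with a `ListBucketResult` XML document. Here is a small sketch of how that response could be parsed to enumerate object keys. The sample XML in the usage section is invented for illustration, and the function only handles a single page of results.

```python
import xml.etree.ElementTree as ET

# XML namespace used by S3 list responses.
S3_NS = "{http://s3.amazonaws.com/doc/2006-03-01/}"


def list_keys(listing_xml: str) -> list[str]:
    """Return the object keys from a ListBucketResult document.

    Returns an empty list for anything else, such as an AccessDenied error body.
    """
    root = ET.fromstring(listing_xml)
    if root.tag != f"{S3_NS}ListBucketResult":
        return []
    return [element.text or "" for element in root.iter(f"{S3_NS}Key")]


if __name__ == "__main__":
    # Invented sample shaped like a public bucket listing.
    sample = (
        '<ListBucketResult xmlns="http://s3.amazonaws.com/doc/2006-03-01/">'
        "<Name>example-bucket</Name>"
        "<Contents><Key>backup.zip</Key></Contents>"
        "<Contents><Key>docs/agenda.pdf</Key></Contents>"
        "</ListBucketResult>"
    )
    print(list_keys(sample))
```

Feeding it the body you see in the browser gives a quick inventory of what is exposed.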

Then we have to set it up by typing in:

aws configure

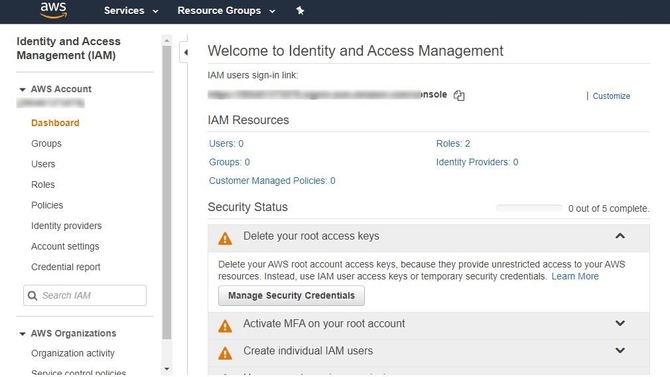

It will ask for four parameters: access key ID, secret access key, region, and output format.

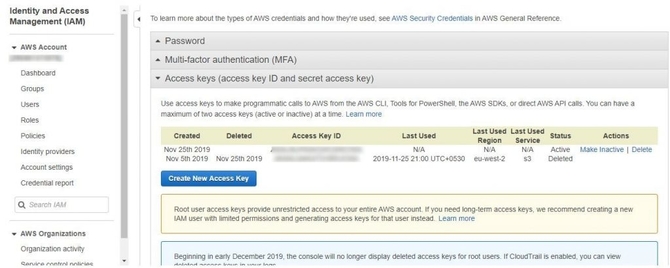

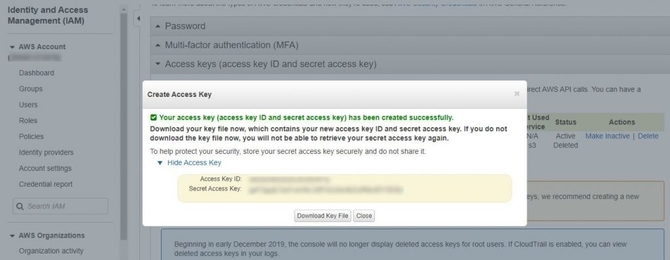

* You can then create a new access key under the access key tab, which gives you both the access key ID and the secret access key.

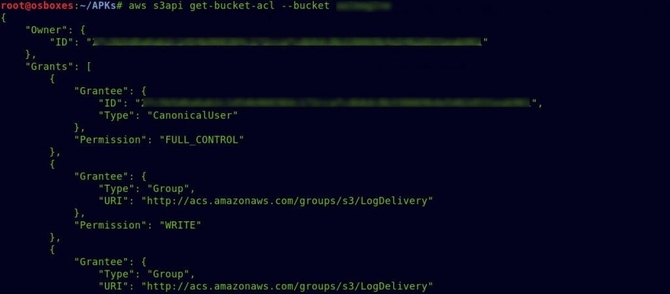

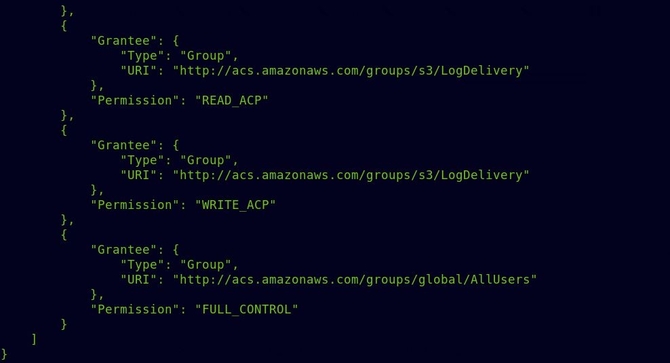

* You can leave the region and output format at the defaults offered by the command itself.

Once all the configuration was done, I quickly checked the bucket ACL with the command below:

aws s3api get-bucket-acl --bucket bucket_name

As the output in the picture showed, Full Control over the bucket was granted to the AllUsers group.
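Reading the ACL output by eye works for one bucket, but it is easy to script. The sketch below assumes the JSON shape that `aws s3api get-bucket-acl` prints (a `Grants` list whose entries carry a `Grantee` and a `Permission`) and flags any grant to the two predefined public groups. The helper name `public_grants` and the sample JSON are my own illustration.

```python
import json

# Predefined S3 groups that effectively mean "the public".
PUBLIC_GROUPS = {
    "http://acs.amazonaws.com/groups/global/AllUsers": "AllUsers",
    "http://acs.amazonaws.com/groups/global/AuthenticatedUsers": "AuthenticatedUsers",
}


def public_grants(acl_json: str) -> list[tuple[str, str]]:
    """Return (group, permission) pairs for every grant made to a public group."""
    acl = json.loads(acl_json)
    findings = []
    for grant in acl.get("Grants", []):
        grantee = grant.get("Grantee", {})
        group = PUBLIC_GROUPS.get(grantee.get("URI", ""))
        if group:
            findings.append((group, grant.get("Permission", "")))
    return findings


if __name__ == "__main__":
    # Invented sample resembling the misconfiguration described above.
    sample = json.dumps({
        "Owner": {"ID": "owner-id"},
        "Grants": [
            {
                "Grantee": {
                    "Type": "Group",
                    "URI": "http://acs.amazonaws.com/groups/global/AllUsers",
                },
                "Permission": "FULL_CONTROL",
            }
        ],
    })
    print(public_grants(sample))
```

Any `FULL_CONTROL`, `WRITE`, or `WRITE_ACP` entry in the result is worth reporting straight away.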

Without wasting any more time, I tried to upload a text file:

aws s3 cp random.txt s3://bucket_name

With the ls command, we can list all files:

aws s3 ls s3://bucket_name

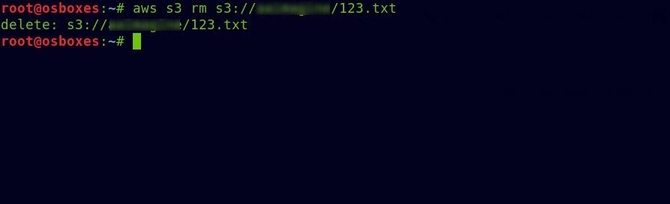

Okay, so the file was uploaded successfully. Next, I tried to remove it. The same rm command used on Linux systems can be used to delete a file:

aws s3 rm s3://bucket_name/file.txt

The text file was removed successfully.

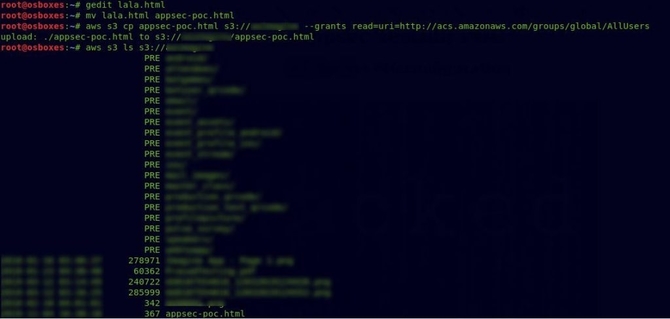
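The upload, list, and delete steps above can be chained into one small write probe. This is only a sketch: it shells out to the same `aws s3 cp`, `aws s3 ls`, and `aws s3 rm` commands, assumes the AWS CLI is already configured, and the `probe_write` helper with its injectable `run` parameter is something I made up so the logic can be checked without touching a real bucket.

```python
import subprocess


def probe_write(bucket: str, local_file: str, run=subprocess.run) -> dict[str, bool]:
    """Upload a harmless file, list the bucket, then clean up.

    Returns which steps succeeded. Only run this against buckets you are
    authorized to test.
    """
    key = local_file.rsplit("/", 1)[-1]
    target = f"s3://{bucket}/{key}"

    def ok(argv: list[str]) -> bool:
        # A zero exit code from the AWS CLI means the operation was allowed.
        return run(argv, capture_output=True, text=True).returncode == 0

    results = {"upload": ok(["aws", "s3", "cp", local_file, target])}
    if results["upload"]:
        results["list"] = ok(["aws", "s3", "ls", f"s3://{bucket}"])
        # Always try to remove the test file once it has been uploaded.
        results["delete"] = ok(["aws", "s3", "rm", target])
    return results


if __name__ == "__main__":
    # "bucket_name" and "random.txt" are the placeholders used in this post.
    print(probe_write("bucket_name", "random.txt"))
```

If the upload step fails, the probe stops there, so nothing is left behind in the bucket.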

For the final PoC, I made a quick HTML page, uploaded it to the bucket, and gave it public read permission.

Burp Plugin

A Burp Suite plugin called AWS Extender, created by Virtue Security, can also be used to check for misconfigurations. The configuration steps are almost the same: access key, secret key, and so on. Follow this link for more information: https://github.com/VirtueSecurity/aws-extender

Impact

The potential impact was immense. The bucket contained a lot of sensitive information about a conference that was due to take place a few months later, and any anonymous user had full control of the bucket and could delete or change any item in it. On top of that, the company has a huge customer base and handles the data of around a million customers, so any mishap could have cost the company a lot.

Mitigation

As you might already have guessed, the bucket’s ACL must be properly configured, and Full Control should never be assigned to the AllUsers group. Here’s a good blog about setting your buckets to secure ACLs:

References

I feel that explains it all.

So that’s it for now. See you next time. Goodbye!
